Data center energy and AI in 2025

Global data center energy consumption was 240-340 TWh in 2022, but AI is now a major driver of future projections. An update on the 2024 US Data Center Energy report.

The first article I wrote about data center energy was back in 2020: "How much energy do data centers use?" It remains one of my most popular posts because it analyzed all the sources I could find (very little information was public then). Much of my research came from the 2016 United States Data Center Energy Usage Report by Shehabi et al., which was the definitive source at the time.

The Energy Act of 2020 instructed the US Department of Energy to produce an updated report, which was published at the end of 2024. It once again provides a detailed look at data centers in the US.

I have updated my 2020 blog post with all new information from that report, so this is a summary of the key changes. There’s a lot more to write about, but these are the highlights.

Data center energy consumption

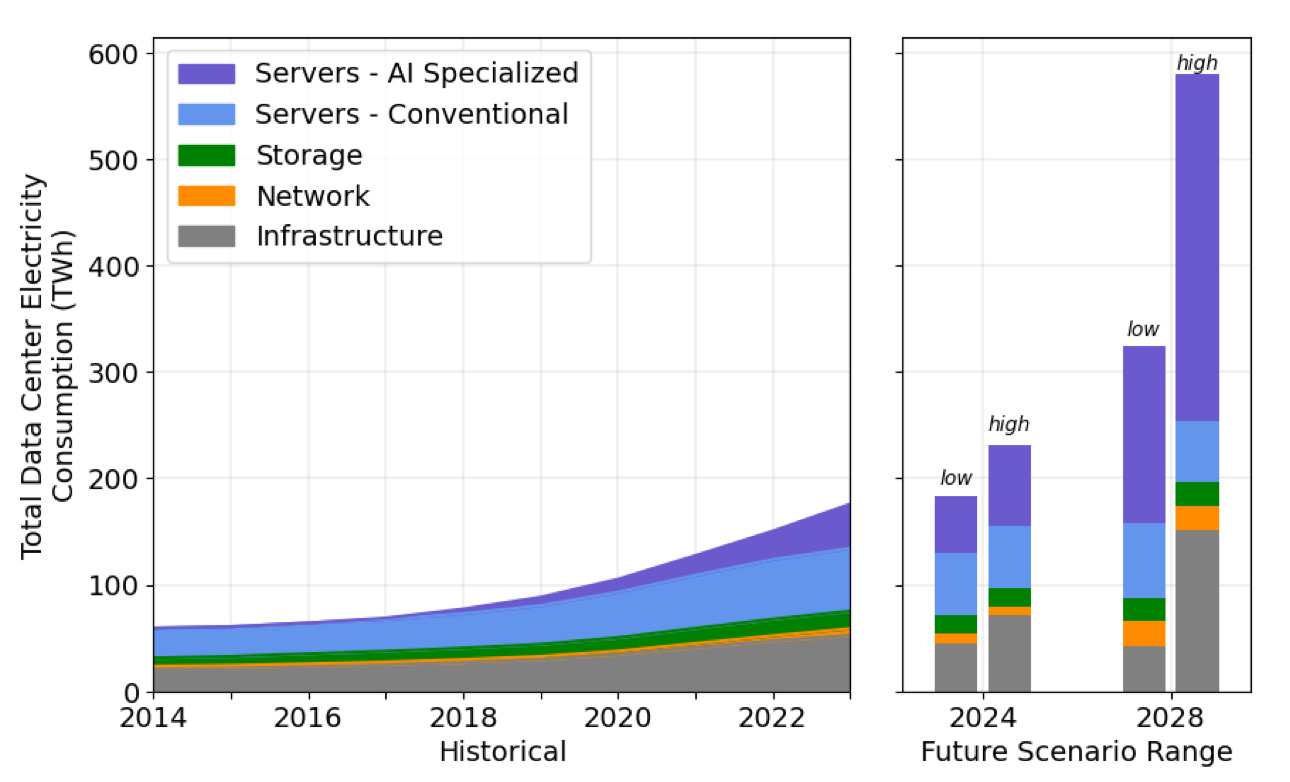

In the United States, data center energy consumption in 2023 is estimated to be 176 terawatt-hours (TWh), or 4.4% of the country’s total electricity consumption. Between 2014 and 2016, consumption remained relatively stable, hovering around 60 TWh. However, it began to increase steadily from approximately 2017 onwards.
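As a rough sanity check, the two US figures above imply a value for total national electricity consumption. The Python sketch below simply divides one by the other; the function name `implied_total_twh` is my own, used only for illustration, and the result is only as precise as the rounded 4.4% share.

```python
def implied_total_twh(part_twh: float, share_percent: float) -> float:
    """Return the total consumption implied by a part and its percentage share."""
    if share_percent <= 0:
        raise ValueError("share_percent must be positive")
    return part_twh / (share_percent / 100.0)


# Figures quoted above for the United States in 2023.
us_data_center_twh = 176.0
us_share_percent = 4.4

us_total_twh = implied_total_twh(us_data_center_twh, us_share_percent)
print(f"Implied US electricity consumption in 2023: {us_total_twh:,.0f} TWh")
```

This works out to roughly 4,000 TWh, which is a useful number to keep in mind when reading the percentage ranges later in the post.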

Globally, data center energy consumption in 2022 is estimated to be 240-340 TWh, accounting for around 1 to 1.3% of total global electricity demand. It’s important to note that this figure excludes networking activities, which were estimated to consume approximately 260 to 360 TWh in 2022. Additionally, cryptocurrency-related energy consumption is estimated to have been around 100 to 150 TWh in 2022.

Although you can find many reports about data center energy, only two research groups produce reliable estimates.

In a review I co-authored, we analyzed 258 data center energy estimates from 46 original publications between 2007 and 2021, assessing their reliability by examining the 676 sources they used. This revealed that the only credible global models come from the two research groups represented by Masanet and by Hintemann & Hinterholzer (Borderstep):

Global, 2010 = 193 TWh [1]

Global, 2018 = 205 TWh [2]

Global, 2021 = 350-500 TWh [3]

Global, 2022 = 240-340 TWh [4]

USA, 2018 = 76 TWh [5]

USA, 2023 = 176 TWh [6]

The variance in estimates is a major challenge for anyone trying to get to the bottom of how much energy data centers use. This can be seen by plotting the various estimates:

More servers, better at idle, but drawing more power

Power proportionality is key to understanding the efficiency of servers. It describes how closely a server’s power draw scales with its utilization: with perfect power proportionality, a server at 10% utilization will draw 10% of its maximum power. Idle power consumption has been improving over time (idle power is now measured at 20% of the server’s rated power).
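To make the idea concrete, here is a minimal Python sketch of a linear power model: an idle floor plus a component that scales with utilization. The linear shape, the function name `server_power_w`, and the 500 W rated power are assumptions for illustration, not figures from the report; only the 20% idle fraction comes from the text above.

```python
def server_power_w(utilization: float, max_power_w: float, idle_fraction: float) -> float:
    """Estimate power draw with a linear model (an assumed simplification).

    idle_fraction is the share of maximum power drawn at 0% utilization.
    An idle_fraction of 0.0 corresponds to perfect power proportionality.
    """
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    if not 0.0 <= idle_fraction <= 1.0:
        raise ValueError("idle_fraction must be between 0 and 1")
    idle_power_w = idle_fraction * max_power_w
    return idle_power_w + (max_power_w - idle_power_w) * utilization


RATED_POWER_W = 500.0  # hypothetical server, not a measured value

perfect = server_power_w(0.10, RATED_POWER_W, idle_fraction=0.0)
realistic = server_power_w(0.10, RATED_POWER_W, idle_fraction=0.20)
print(f"10% utilization, perfect proportionality: {perfect:.0f} W")
print(f"10% utilization, 20% idle floor:          {realistic:.0f} W")
```

Under these assumptions the perfectly proportional server draws 50 W at 10% utilization, while the server with a 20% idle floor draws 140 W, which is why lightly loaded servers are so inefficient.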

Between 2007 and 2023, the average single socket server drew around 118 W and the average dual socket server drew 365 W. However, updated data from Green Grid and reported values from the SPEC database for 2023-2024 show the average dual socket server drawing 600-750 W.

Servers have become better at using less power when idle, but are now drawing significantly more power during usage.

The total number of servers is also increasing, and there is a new type: AI-related servers. 6.5 million servers were shipped in 2022, estimated to rise to 7.7 million in 2028. The total installed base grew from 14 million in 2014 to 21 million in 2020. Of those, 1.6 million are related to AI.

Network energy consumption is not proportional to usage

Global network energy consumption was 260 to 360 TWh in 2022, but this is related to the number of ports on a network device, not the usage of the network.

Incorrectly linking data volume to network energy consumption has been a common error in academic literature and mainstream media. Networking is an important part of overall data center energy consumption, but unlike server energy consumption, total network energy consumption does not grow with usage.
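A deliberately simplified Python sketch of that point: if a device's draw depends on how many ports it has, then traffic volume never appears in the calculation. The 48-port switch and the 5 W per port figure are hypothetical values chosen for illustration, and real devices are not perfectly flat under load.

```python
HOURS_PER_YEAR = 24 * 365


def switch_energy_kwh(port_count: int, watts_per_port: float,
                      hours: float = HOURS_PER_YEAR) -> float:
    """Energy for an always-on device whose draw depends only on its port count.

    Note that data volume is intentionally not a parameter of this model.
    """
    if port_count < 0 or watts_per_port < 0 or hours < 0:
        raise ValueError("inputs must be non-negative")
    return port_count * watts_per_port * hours / 1000.0


# Hypothetical devices: same per-port draw, different port counts.
small_switch_kwh = switch_energy_kwh(port_count=48, watts_per_port=5.0)
large_switch_kwh = switch_energy_kwh(port_count=96, watts_per_port=5.0)
print(f"48 ports: {small_switch_kwh:,.1f} kWh per year")
print(f"96 ports: {large_switch_kwh:,.1f} kWh per year")
```

In this model, doubling the ports doubles the energy, while sending twice as much data through the same ports changes nothing.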

More hyperscale cloud

Data center efficiency is improving. The average PUE has dropped from 2.5 in 2007 to 1.58 in 2023, although it has been flat for the last few years.

Hyperscale cloud data centers from the likes of Amazon, Google, and Microsoft are mainly responsible for the overall efficiency improvements. Internal data centers were most common in 2010, with hyperscale and colocation providers making up less than 10% of all deployments. As of 2023, 74% of all servers are in colocation or hyperscale facilities.

This offsets some of the increased server energy consumption because the facilities the servers are deployed into are more efficient. Google is best in class [7], with a PUE of 1.08 for some of its US data centers, and even its worst-performing site still does very well (1.19 PUE for Singapore).
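PUE (power usage effectiveness) is total facility energy divided by IT equipment energy, so the PUE values above translate directly into overhead. The Python sketch below applies them to a hypothetical 100 MWh of IT load; the load figure and function names are assumptions for illustration only.

```python
def facility_energy(it_energy: float, pue: float) -> float:
    """Total facility energy for a given IT energy, using PUE = total / IT."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy * pue


def overhead_energy(it_energy: float, pue: float) -> float:
    """Energy spent on cooling, power distribution and other non-IT loads."""
    return facility_energy(it_energy, pue) - it_energy


# Hypothetical IT load, chosen only to make the comparison easy to read.
IT_ENERGY_MWH = 100.0

for label, pue in [("2007 average", 2.5), ("2023 average", 1.58), ("Google best", 1.08)]:
    total = facility_energy(IT_ENERGY_MWH, pue)
    overhead = overhead_energy(IT_ENERGY_MWH, pue)
    print(f"{label:>12}: total {total:6.1f} MWh, overhead {overhead:5.1f} MWh")
```

For the same IT load, the overhead falls from 150 MWh at a PUE of 2.5 to 58 MWh at 1.58 and 8 MWh at 1.08, which is why moving servers into more efficient facilities matters.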

AI changes things

Although the past two decades have witnessed significant efficiency improvements, AI is going to present challenges.

Panic over data center energy is not new. A peer-reviewed article from 2015 predicted that data centers would consume 1,200 TWh of energy by 2020. As I covered in my data center energy review, this projection proved completely wrong.

I expect the same kind of alarm with AI, especially considering how early we are and how fast things are moving. Last year I wrote about the variables that make it difficult to predict: new models with fewer parameters, more energy efficient models, different data center hardware, and different client hardware.

We can already see this happening in the significantly lower training cost of the DeepSeek model compared with what was previously possible at OpenAI and others:

During the pre-training stage, training DeepSeek-V3 on each trillion tokens requires only 180K H800 GPU hours, i.e., 3.7 days on our cluster with 2048 H800 GPUs. Consequently, our pre-training stage is completed in less than two months and costs 2664K GPU hours. Combined with 119K GPU hours for the context length extension and 5K GPU hours for post-training, DeepSeek-V3 costs only 2.788M GPU hours for its full training. Assuming the rental price of the H800 GPU is $2 per GPU hour, our total training costs amount to only $5.576M.

That is not the fully loaded cost, but considering how much it cost to train the current leading models, this is indicative of the direction things are taking.
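The arithmetic in the DeepSeek quote is easy to reproduce. This short Python sketch uses only the numbers quoted above; the variable names are mine, and the $2 per GPU hour rental price is the paper's own assumption rather than a measured cost.

```python
# Figures taken from the DeepSeek-V3 quote above.
GPU_COUNT = 2048
PRICE_PER_GPU_HOUR_USD = 2  # rental price assumed in the quote

pre_training_gpu_hours = 2_664_000
context_extension_gpu_hours = 119_000
post_training_gpu_hours = 5_000

total_gpu_hours = (
    pre_training_gpu_hours
    + context_extension_gpu_hours
    + post_training_gpu_hours
)
total_cost_usd = total_gpu_hours * PRICE_PER_GPU_HOUR_USD

# Wall-clock days to process one trillion tokens on the full cluster.
gpu_hours_per_trillion_tokens = 180_000
days_per_trillion_tokens = gpu_hours_per_trillion_tokens / GPU_COUNT / 24

print(f"Total GPU hours: {total_gpu_hours:,}")
print(f"Total cost: ${total_cost_usd:,}")
print(f"Days per trillion tokens: {days_per_trillion_tokens:.1f}")
```

The totals come out at 2,788,000 GPU hours, $5,576,000, and about 3.7 days per trillion tokens, matching the quoted figures.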

Despite advancements in AI energy efficiency, the overall energy consumption is expected to rise due to the substantial increase in usage. A significant portion of the energy consumption surge is attributed to AI-related servers: from 2 TWh in 2017 to 40 TWh in 2023.
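To put that jump in perspective, the Python sketch below converts the two endpoints into an average annual growth rate. It assumes smooth compound growth between 2017 and 2023, which is my simplification and not something the report claims.

```python
def compound_annual_growth_rate(start_value: float, end_value: float, years: int) -> float:
    """Average yearly growth rate that turns start_value into end_value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# AI-related server energy consumption quoted above, in TWh.
ai_twh_2017 = 2.0
ai_twh_2023 = 40.0

ai_growth = compound_annual_growth_rate(ai_twh_2017, ai_twh_2023, 2023 - 2017)
print(f"Growth multiple: {ai_twh_2023 / ai_twh_2017:.0f}x")
print(f"Implied average annual growth: {ai_growth:.0%}")
```

A 20x increase over six years corresponds to roughly 65% growth per year on average.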

This is a big driver behind the projected scenarios in the 2024 US Data Center Energy Report ranging from 325 to 580 TWh (6.7% to 12% of total electricity consumption) in the US by 2028.

With network energy consumption remaining flat or improving, and storage and data center infrastructure energy consumption also showing similar trends, the primary focus for improvements lies in the servers and GPUs that drive the majority of the increase in energy consumption. This is where the opportunity for efficiency lies.

[7] Only for Google owned and operated facilities. This is a big hole in Google’s data because it runs many more data centers leased from the likes of Equinix and Digital Realty, but doesn’t report their PUE.
